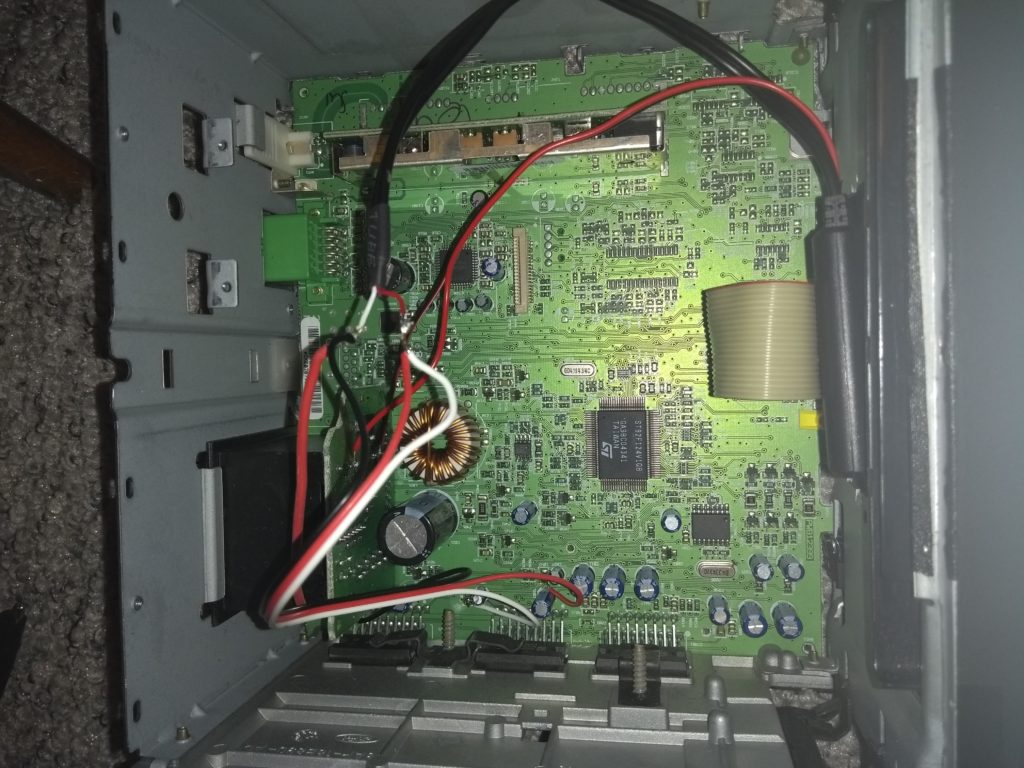

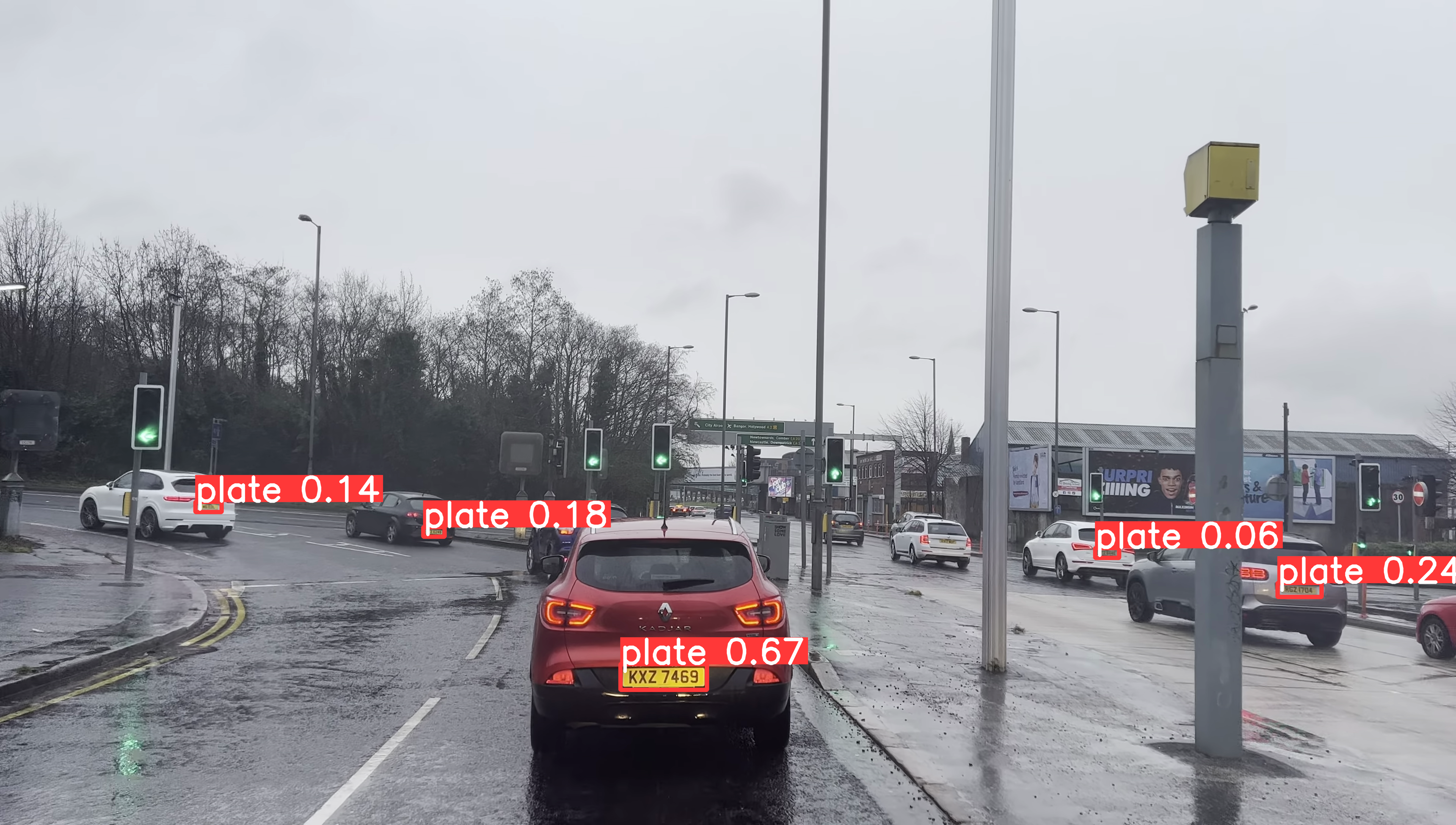

I have been playing with YOLOv8 for about 3 weeks now and have been curating a British number plate tracking dataset. Using videos from YouTube, I trained a basic model and used it to help me label subsequent datasets, which takes the pain out of labeling every image manually. I have been using labelImg, AnyLabeling, and Python scripts to crop out the annotated regions, view the plates, and perfect the dataset. This is the result so far, and I am surprised by how accurate it is. I have had to use a small batch size of 3 because I am training on 4K-resolution images on an RTX 3090.

As you can see above, it still makes errors. However, this was with the confidence threshold turned down to 0.02 so that more training labels would be created. I then delete the false-positive predictions by hand, which are not too numerous. This is easier than drawing the box labels myself.
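To make the pre-labeling step concrete, here is a minimal sketch of turning model detections into YOLO-format label lines while keeping everything at or above a low confidence threshold. The function name `to_yolo_lines` and the `(class_id, conf, x1, y1, x2, y2)` tuple layout are my own assumptions for illustration, not the exact script I use. Ultralytics can also write label files directly when predicting with `save_txt=True`.

```python
def to_yolo_lines(detections, img_w, img_h, conf_threshold=0.02):
    """Convert pixel-space boxes into YOLO label lines.

    detections: iterable of (class_id, conf, x1, y1, x2, y2) in pixels
    (a hypothetical layout chosen for this sketch).
    Detections below conf_threshold are dropped; the rest are kept for
    manual review, false positives included.
    """
    lines = []
    for class_id, conf, x1, y1, x2, y2 in detections:
        if conf < conf_threshold:
            continue
        # YOLO labels store the box centre and size, normalised to 0..1.
        cx = (x1 + x2) / 2 / img_w
        cy = (y1 + y2) / 2 / img_h
        w = (x2 - x1) / img_w
        h = (y2 - y1) / img_h
        lines.append(f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}")
    return lines


if __name__ == "__main__":
    # One plausible plate and one very low-confidence box on a 4K frame.
    dets = [(0, 0.90, 1920, 1080, 2304, 1188), (0, 0.01, 0, 0, 10, 10)]
    for line in to_yolo_lines(dets, img_w=3840, img_h=2160):
        print(line)
```

The resulting lines can be saved as a `.txt` file next to each image and opened in a labeling tool, where deleting the odd false positive is quicker than drawing every box from scratch.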

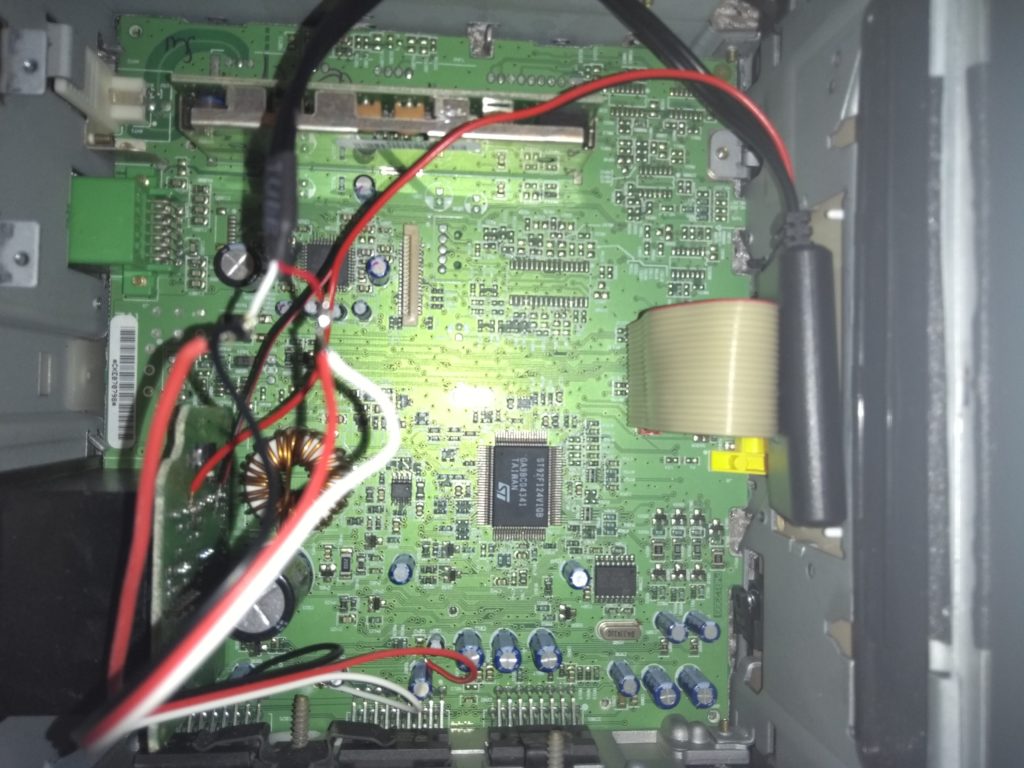

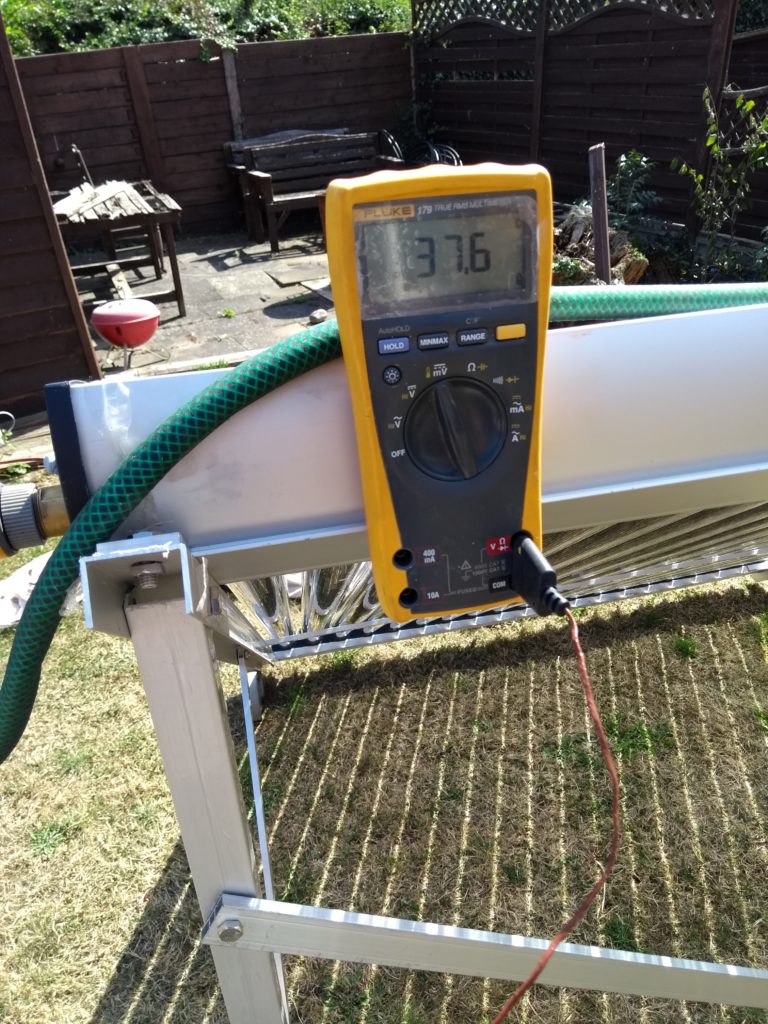

Here is the result of training a segmentation model, yolov8n.pt, on the dataset that I have. For most characters I am getting good recognition results, in the 60% to 70% range, except for ambiguous pairs such as B and 8 or D and 0, which look very alike. I have about 10 examples of each letter in the training data. I will see what results I get by adding more examples.
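Before adding more examples, it helps to know which characters are thinnest in the data. The sketch below counts instances per class across a folder of YOLO label files; it relies only on the class id being the first token on each line, which holds for both box and segmentation labels. The function names and the `names` mapping are hypothetical and only for illustration.

```python
from collections import Counter
from pathlib import Path


def count_class_instances(label_dir):
    """Count how many labelled instances each class id has in label_dir."""
    counts = Counter()
    for label_file in Path(label_dir).glob("*.txt"):
        for line in label_file.read_text().splitlines():
            parts = line.split()
            if parts:  # skip blank lines
                counts[int(parts[0])] += 1
    return counts


def underrepresented(counts, names, minimum):
    """Return the class names with fewer than `minimum` instances, sorted."""
    return sorted(name for cid, name in names.items() if counts.get(cid, 0) < minimum)
```

For example, `underrepresented(count_class_instances("labels/train"), names, 50)` would list the characters with fewer than 50 examples, where `labels/train` and the threshold of 50 are placeholders rather than values from my setup.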